DevOps can modularize processes across the entire project, from development through testing to deployment, including configuration changes, quality checks, and dependency gathering. This not only reduces the risk of error but also frees developers to spend more time writing code and delivering new digital services to the business.

A common question that arises when using a Kubernetes Pod as a Jenkins slave is how to let developers dynamically select the version of the Docker image for their Jenkins slave.

Modularize, Modularize, Modularize

In this post, I will describe the strategy we implemented to modularize the Kubernetes Pod as a Jenkins slave in complex production pipelines, and present the new Groovy pipeline code I developed to make it a reality.

An overview of the proposed solution

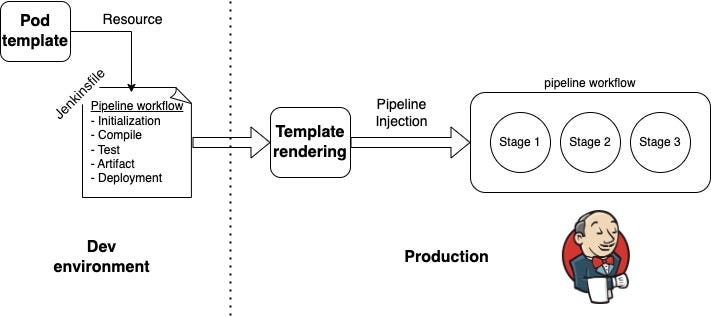

The diagram above outlines our desired workflow when using a Kubernetes Pod template. We implemented a simple approach in which a reusable, configurable template drives Jenkins slave execution. The workflow starts from a template file and a corresponding configuration file (the Jenkinsfile). These input files are used to render the workflow, which is then sent to Jenkins for execution.

By customizing the Jenkins slave for their specific workflow, developers can improve the efficiency and consistency of their projects. They can tailor the slave to their needs and optimize it for the tasks it will perform.

The diagram illustrates the key components and their functionality in the pipeline workflow.

- The pipeline workflow outlines the sequence of steps that are executed in the pipeline.

- The Kubernetes pod template is used to define the dynamic resources that are required for the pipeline.

- The template rendering component is responsible for replacing placeholders in the pod template with the pre-defined variables from the Jenkinsfile.

- The Jenkinsfile is where the pipeline shared libraries and their associated environment variables are defined.

Pipeline Workflow

In our demonstration, we preinstalled the necessary Kubernetes plugin. We will use this plugin to manage pods, which are used to run applications and pipeline workflows. These pods, known as Jenkins slaves, are brought on-board with each build. As soon as your pipeline begins, it will pull the necessary image to run within the Kubernetes cluster.

To give a complete picture of the setup, let's walk through the pipeline and explore its functions in detail.

The following is a basic example of a pipeline:

https://gist.github.com/naturalett/de1208ff6a371c98d93d6dfba3a54715

Kubernetes Pod Template

The resources directory lets a shared library load non-Groovy files through the libraryResource step. Because libraryResource returns the file contents as a string rather than a file, it can be used to load a YAML pod definition from resource files. In the following example, we build a YAML file with optional lines based on a variable map and the default variables that we want to pass.

https://gist.github.com/naturalett/bfce078a30d331508ab2b746b48df028

The above YAML code creates a pod which has multiple arguments that it expects to get from the developer:

- build_label

- node_version

- node_image_repository

- image_dependencies
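To make the placeholders concrete, here is a minimal sketch of what such a template might look like, held in a Groovy string so it can be rendered on the spot. The actual template is the one in the gist above. The YAML layout, the container names, the sample values, and the assumption that `image_dependencies` is a list of maps with `name` and `image` keys are all illustrative and not taken from the original.

```groovy
import groovy.text.StreamingTemplateEngine

// Hypothetical pod template. In the shared library this text would live
// under resources/ and be loaded with libraryResource.
String podYamlTemplate = '''\
apiVersion: v1
kind: Pod
metadata:
  labels:
    build: ${build_label}
spec:
  containers:
  - name: node
    image: ${node_image_repository}:${node_version}
    command: ["cat"]
    tty: true
<% for (dep in image_dependencies) { %>  - name: ${dep.name}
    image: ${dep.image}
    command: ["cat"]
    tty: true
<% } %>'''

// Illustrative values; a real Jenkinsfile would supply its own.
Map templateVars = [
    build_label          : 'datascience',
    node_version         : 'fermium',
    node_image_repository: 'node',
    image_dependencies   : [[name: 'aws-cli', image: 'amazon/aws-cli:latest']]
]

def template = new StreamingTemplateEngine().createTemplate(podYamlTemplate)
String rendered = template.make(templateVars).toString()
println rendered
```

The `for` loop is what makes the extra containers optional: with an empty `image_dependencies` list, only the `node` container is emitted.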

Template Rendering Component

To use the template properly, there are a few steps to follow:

- Ensure that every variable used in the template is defined in the corresponding map (Jenkinsfile).

- Verify that the template, when rendered, produces valid Kubernetes Pod YAML.

- Utilize the StreamingTemplateEngine to render the template.

https://gist.github.com/naturalett/f53bb25e4889645a4111d9abbdcbc35a
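The first and third steps can be sketched together in plain Groovy. This is not the code from the gist; it is a simplified illustration in which `findPlaceholders` and `missingVariables` are hypothetical helpers, and the placeholder check only recognizes simple `${name}` expressions (it does not understand scriptlets or loop variables).

```groovy
import groovy.text.StreamingTemplateEngine

// Collect the names used in simple ${name} placeholders.
// Deliberately naive: it ignores <% ... %> scriptlets entirely.
Set<String> findPlaceholders(String templateText) {
    def matcher = templateText =~ '\\$\\{\\s*([A-Za-z_]\\w*)'
    return matcher.collect { it[1] } as Set
}

// Return the placeholder names that the variable map does not define.
Set<String> missingVariables(String templateText, Map variables) {
    return findPlaceholders(templateText) - variables.keySet()
}

// Render the template, failing early with a readable message when a
// variable is missing rather than part-way through rendering.
String renderTemplate(String templateText, Map variables) {
    Set<String> missing = missingVariables(templateText, variables)
    if (missing) {
        throw new IllegalArgumentException(
            "Undefined template variables: ${missing.sort().join(', ')}")
    }
    return new StreamingTemplateEngine()
        .createTemplate(templateText).make(variables).toString()
}

String snippet = 'image: ${node_image_repository}:${node_version}'
println renderTemplate(snippet, [node_image_repository: 'node', node_version: 'fermium'])
// prints: image: node:fermium
```

Inside a shared library, a function like this would typically be exposed from vars/renderTemplate.groovy through a `call` method so that the Jenkinsfile can invoke it as a step.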

Streamline Your Pipeline With Jenkins Shared Library

Our pipeline is driven by a Jenkinsfile, which contains the code needed to call the functions and resources in the Shared Library. The library is structured as follows:

    (root)
    +- src
    |   +- org
    |       +- foo
    |           +- sharedLibrary.groovy
    +- vars
    |   +- renderTemplate.groovy
    |   +- commonPipeline.groovy
    +- resources
    |   +- org
    |       +- foo
    |           +- k8s-pod-template.yaml
    |           +- aws-cli-yaml

Now, let’s take a look at the Jenkinsfile that we’ll be using in our pipeline:

https://gist.github.com/naturalett/301030c132aa43e173979477203dbd47

As we can see in the Jenkinsfile, we have defined the variables that are used in the template:

    def template_vars = [
        'build_label': 'datascience',
        'node_version': 'fermium',
        'image_dependencies': [image_dependencies]
    ]

Next, we will utilize the render function that we have previously defined:

pod = renderTemplate(pod, template_vars)
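Note that the map above does not set `node_image_repository`, even though the template expects it. One way to handle this, sketched below as an assumption rather than as the author's implementation, is to keep default values in the shared library and merge the developer's map on top of them before rendering. The `withDefaults` helper and the default values are hypothetical.

```groovy
// Hypothetical defaults kept alongside the template in the shared library.
Map defaultVars = [
    build_label          : 'default',
    node_version         : 'lts',
    node_image_repository: 'node',
    image_dependencies   : []
]

// Merge developer overrides on top of the defaults.
// With Map + Map, entries from the right-hand map win on key collisions.
Map withDefaults(Map defaults, Map overrides) {
    return defaults + overrides
}

// Values a developer might set in the Jenkinsfile.
Map overrides = [build_label: 'datascience', node_version: 'fermium']

Map templateVars = withDefaults(defaultVars, overrides)
println templateVars.node_version           // prints: fermium
println templateVars.node_image_repository  // prints: node
```

With this approach, the Jenkinsfile only needs to list the values that differ from the defaults, and every placeholder in the template still receives a value.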

Summary

As discussed in this post, developers now have the ability to customize the pod template in their Jenkins pipeline, providing greater flexibility in selecting and configuring the container images used in the Jenkins slave. This means that developers can tailor their pipeline to their specific needs, ensuring a streamlined and efficient workflow.

If you’re interested in further exploring different pipeline scenarios and learning practical production skills for implementing continuous integration in your own work, check out my course:

Hands-On Mastering DevOps — CI and Jenkins Container Pipelines