In our R&D team, we store our source code on GitHub or Bitbucket. When we want to change our infrastructure, we open a pull request, and after several rounds of review and comments the changes are approved. Throughout this iterative process, Terraform commands are run either on someone's laptop or on an automation workstation.

Meet Atlantis, a game-changing tool that automates your IaC workflow by running Terraform directly from pull requests.

What we will cover:

- Why would you run Atlantis? Understand what led us to use it.

- Configuring Atlantis on ECS using Terraform: learn how we did it.

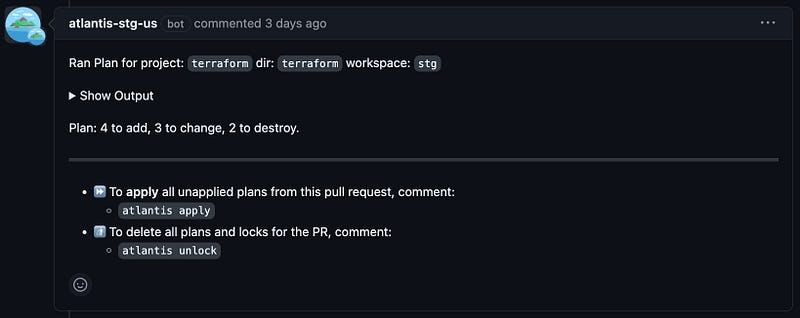

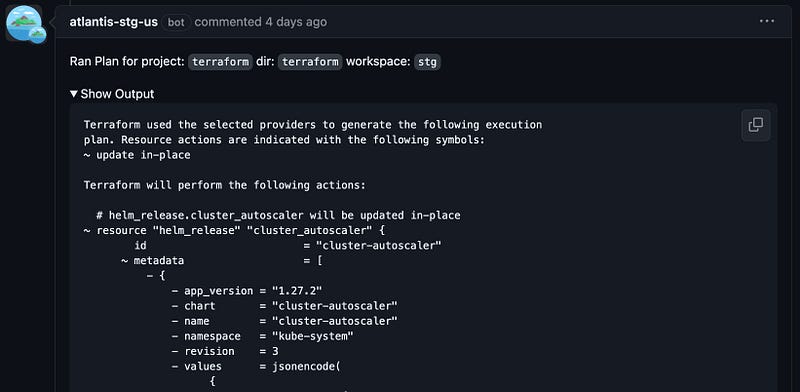

Atlantis not only runs Terraform commands but also comments the output on the pull request. Every team member can see which resources are about to be created or destroyed, and who triggered each action.

Why would you run Atlantis?

Increased visibility

When individuals run Terraform on their own computers, it becomes hard to know the current state of your infrastructure:

- Is the deployment from the main branch in effect?

- Did someone forget to open a pull request for the latest change?

- What was the output of the last Terraform apply?

With Atlantis, you see everything right on the pull request, giving you a clear view of the entire history of actions taken on your infrastructure.

Promote collaborative teamwork

Sharing Terraform credentials across your engineering organization is not ideal. With Atlantis, the credentials stay with the Atlantis server, so anyone can open a Terraform pull request without holding them.

Elevate Your Terraform Pull Request Reviews

The output of terraform plan and terraform apply is posted to the pull request automatically, so reviewers see exactly what will change before they approve it.

Configuring Atlantis on ECS Using Terraform

The Atlantis documentation lists several deployment methods, such as the Kubernetes Helm chart and Kubernetes manifests. We decided to deploy Atlantis on Amazon ECS with AWS Fargate to keep it isolated from the rest of our infrastructure, so Atlantis runs serverless, outside our Kubernetes cluster. Our starting point was the community terraform-aws-modules/atlantis/aws module:

```hcl
module "atlantis" {
  source = "terraform-aws-modules/atlantis/aws"

  name = "atlantis"

  # ECS Container Definition
  atlantis = {
    environment = []

    secrets = []
  }
}
```
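The environment and secrets lists above are where the Atlantis server settings go. As a hedged illustration only (these are not our values), a filled-in call might look like the sketch below. It assumes version 4.x of the module, where networking is passed through vpc_id, service_subnets, alb_subnets, certificate_domain_name and route53_zone_id; check the module README for the version you use. Every ID, domain, organization and user name is a placeholder. ATLANTIS_GH_USER and ATLANTIS_REPO_ALLOWLIST are standard Atlantis server settings: the GitHub user Atlantis acts as, and the repositories it is allowed to serve.

```hcl
# Sketch only: assumes terraform-aws-modules/atlantis/aws v4.x.
# All values below are hypothetical placeholders.
module "atlantis" {
  source  = "terraform-aws-modules/atlantis/aws"
  version = "~> 4.0" # assumption: pin to the major version you have tested

  name = "atlantis"

  # ECS Container Definition
  atlantis = {
    environment = [
      {
        # GitHub user that Atlantis authenticates as (placeholder)
        name  = "ATLANTIS_GH_USER"
        value = "my-atlantis-bot"
      },
      {
        # Repositories this Atlantis instance may act on (placeholder org)
        name  = "ATLANTIS_REPO_ALLOWLIST"
        value = "github.com/my-org/*"
      },
    ]
  }

  # Networking: Atlantis runs as its own Fargate service behind an ALB,
  # outside any Kubernetes cluster (placeholder IDs)
  vpc_id          = "vpc-0123456789abcdef0"
  service_subnets = ["subnet-aaaa1111", "subnet-bbbb2222"]
  alb_subnets     = ["subnet-cccc3333", "subnet-dddd4444"]

  # TLS certificate and DNS record for the Atlantis URL (placeholders)
  certificate_domain_name = "example.com"
  route53_zone_id         = "Z0123456789EXAMPLE"
}
```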

The Datadog sidecar container

In our approach, we took the Atlantis Terraform module and made minor adjustments to improve its security. Since we use Datadog for infrastructure monitoring, we also added a Datadog agent sidecar container that runs alongside Atlantis in the same ECS task:

```hcl
module "atlantis" {
  source = "terraform-aws-modules/atlantis/aws"

  name = "atlantis"

  # ECS Container Definition
  atlantis = {
    environment = []

    secrets = []
  }

  # ECS Service
  service = {
    cpu    = "1024"
    memory = "2048"

    container_definitions = {
      datadog-agent = {
        name   = "datadog-agent"
        image  = "gcr.io/datadoghq/agent:7.46.0"
        memory = "400"
        cpu    = "400"

        environment = [
          {
            name  = "ECS_FARGATE"
            value = "true"
          },
          {
            name  = "DD_API_KEY"
            value = data.aws_secretsmanager_secret_version.datadog_api_key_plaintext.secret_string
          },
          {
            name  = "DD_SITE"
            value = "datadoghq.com"
          },
          {
            name  = "DD_PROMETHEUS_SCRAPE_ENABLED"
            value = "true"
          },
          {
            name  = "DD_PROMETHEUS_SCRAPE_SERVICE_ENDPOINTS"
            value = "true"
          },
          {
            name  = "DD_TAGS"
            value = "env:${var.env} region:${var.region}"
          }
        ]

        readonly_root_filesystem = false
      }
    }
  }
}
```
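One hardening step worth considering: above, DD_API_KEY is passed as a plain environment value, so the key is written into the ECS task definition, where anyone allowed to describe task definitions can read it. ECS can instead inject it at container start from Secrets Manager through a secrets entry with valueFrom. The sketch below shows only the pieces that change; the other agent settings (cpu, memory, the remaining environment entries) would stay as above. It assumes the /datadog/api_key_plaintext secret holds the raw key string, and that the module forwards task_exec_secret_arns to its ECS service so the task execution role may read that secret.

```hcl
# Sketch: only the parts that differ from the sidecar block above are shown.
module "atlantis" {
  source = "terraform-aws-modules/atlantis/aws"

  name = "atlantis"

  service = {
    # Allow the task execution role to read the secret when the task starts
    task_exec_secret_arns = [
      data.aws_secretsmanager_secret_version.datadog_api_key_plaintext.arn,
    ]

    container_definitions = {
      datadog-agent = {
        name  = "datadog-agent"
        image = "gcr.io/datadoghq/agent:7.46.0"

        # ECS resolves valueFrom at runtime and exposes the value as DD_API_KEY,
        # so the key itself never appears in the task definition
        secrets = [
          {
            name      = "DD_API_KEY"
            valueFrom = data.aws_secretsmanager_secret_version.datadog_api_key_plaintext.arn
          },
        ]
      }
    }
  }
}
```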

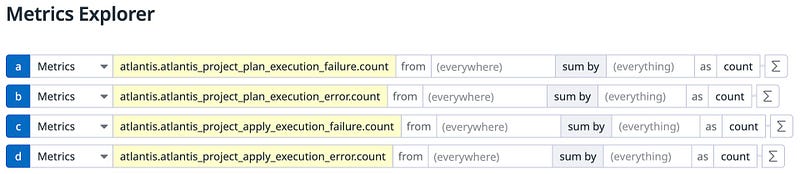

Prometheus and OpenMetrics collection

We want to collect metrics through Prometheus autodiscovery, so we set two specific environment variables on the Datadog agent:

- DD_PROMETHEUS_SCRAPE_ENABLED

- DD_PROMETHEUS_SCRAPE_SERVICE_ENDPOINTS

This allows the Datadog agent to pick up the scraping configuration that we attach to the Atlantis container as Docker labels:

```hcl
docker_labels = {
  "com.datadoghq.ad.instances" : "[{\"openmetrics_endpoint\": \"http://%%host%%:4141/metrics\", \"namespace\": \"atlantis\", \"metrics\": [\"atlantis_builder_execution_error\", \"atlantis_builder_execution_success\", \"atlantis_builder_projects\", \"atlantis_project_plan_execution_failure\", \"atlantis_project_plan_execution_error\", \"atlantis_project_apply_execution_error\", \"atlantis_project_apply_execution_failure\"]}]",

  "com.datadoghq.ad.check_names" : "[\"openmetrics\"]",

  "com.datadoghq.ad.init_configs" : "[{}]"
}
```
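Hand-escaped JSON inside label strings is easy to break. A possible alternative, sketched below, builds the same three labels with Terraform's built-in jsonencode() function. It assumes, as in our setup, that the labels are placed on the Atlantis container through the module's atlantis map. jsonencode() sorts object keys, so the resulting string differs in key order from the one above but encodes the same JSON. Inside an HCL string, only %%{ is an escape sequence, so %%host%% reaches Datadog unchanged as its Autodiscovery template variable.

```hcl
# Sketch: the same Datadog Autodiscovery labels, generated with jsonencode()
# instead of hand-escaped strings.
module "atlantis" {
  source = "terraform-aws-modules/atlantis/aws"

  name = "atlantis"

  atlantis = {
    docker_labels = {
      "com.datadoghq.ad.check_names"  = jsonencode(["openmetrics"])
      "com.datadoghq.ad.init_configs" = jsonencode([{}])
      "com.datadoghq.ad.instances" = jsonencode([{
        # %%host%% is a Datadog template variable; Atlantis listens on 4141
        openmetrics_endpoint = "http://%%host%%:4141/metrics"
        namespace            = "atlantis"
        metrics = [
          "atlantis_builder_execution_error",
          "atlantis_builder_execution_success",
          "atlantis_builder_projects",
          "atlantis_project_plan_execution_failure",
          "atlantis_project_plan_execution_error",
          "atlantis_project_apply_execution_error",
          "atlantis_project_apply_execution_failure",
        ]
      }])
    }
  }
}
```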

Don’t expose your secrets

We deploy Atlantis from our local machine, which already has access to our cloud account. However, our Terraform code also needs access to both GitHub and Datadog. To avoid hard-coding those credentials, we stored the tokens in AWS Secrets Manager and read them with data sources:

```hcl
data "aws_secretsmanager_secret_version" "github_token" {
  secret_id = "/github/token"
}

data "aws_secretsmanager_secret_version" "github_token_plaintext" {
  secret_id = "/github/token_plaintext"
}

data "aws_secretsmanager_secret_version" "datadog_api_key_plaintext" {
  secret_id = "/datadog/api_key_plaintext"
}
```
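How these data sources might be consumed is sketched below; it is not our exact wiring. The GitHub provider reads the token string directly, because it needs it at plan time to manage repository webhooks, while the Atlantis container receives the token by ARN through an ECS secrets entry, so the value is never written into the task definition. Assumptions: /github/token_plaintext holds the raw token string, the organization name is a placeholder, and the module forwards task_exec_secret_arns to its ECS service. If you already declare required_providers, add the github entry there instead.

```hcl
# Sketch: consuming the secrets above (assumptions noted in comments).
terraform {
  required_providers {
    github = {
      source = "integrations/github"
    }
  }
}

# The GitHub provider needs the token to create repository webhooks
provider "github" {
  owner = "my-org" # placeholder organization
  token = data.aws_secretsmanager_secret_version.github_token_plaintext.secret_string
}

module "atlantis" {
  source = "terraform-aws-modules/atlantis/aws"

  name = "atlantis"

  atlantis = {
    # ECS resolves valueFrom at task start and exposes it as ATLANTIS_GH_TOKEN
    secrets = [
      {
        name      = "ATLANTIS_GH_TOKEN"
        valueFrom = data.aws_secretsmanager_secret_version.github_token_plaintext.arn
      },
    ]
  }

  service = {
    # Grant the task execution role read access to that secret
    task_exec_secret_arns = [
      data.aws_secretsmanager_secret_version.github_token_plaintext.arn,
    ]
  }
}
```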

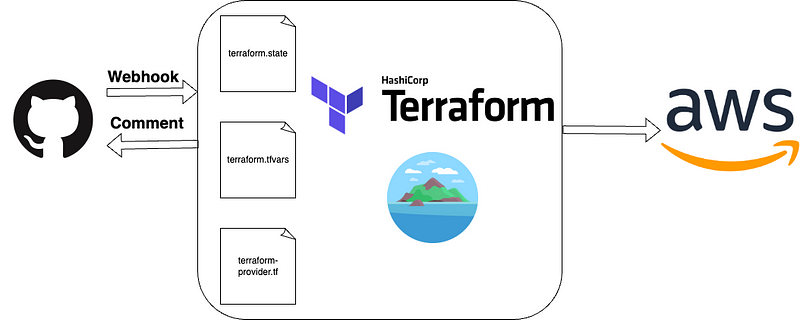

Setting up webhooks from GitHub

To respond to pull request events, Atlantis needs to receive webhooks from your GitHub repositories.

The webhook_list variable contains the repositories we want Atlantis to integrate with.

```hcl
resource "github_repository_webhook" "atlantis" {
  count = var.create ? length(var.webhook_list) : 0

  repository = var.webhook_list[count.index]

  configuration {
    url          = "${module.atlantis.url}/events"
    content_type = "application/json"
    insecure_ssl = false
    secret       = random_password.webhook_secret.result
  }

  events = [
    "issue_comment",
    "pull_request",
    "pull_request_review",
    "pull_request_review_comment",
  ]
}
```
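The webhook resource relies on var.create, var.webhook_list and random_password.webhook_secret, which are not shown above. A minimal sketch of those definitions follows; the descriptions, defaults, password length and the secret name /atlantis/webhook_secret are assumptions for illustration. The same random value also has to reach Atlantis as ATLANTIS_GH_WEBHOOK_SECRET, for example as an entry in the module's atlantis secrets list whose valueFrom is aws_secretsmanager_secret.webhook_secret.arn; otherwise Atlantis has no way to verify that incoming events were signed by GitHub.

```hcl
# Sketch: definitions the webhook resource depends on.
# Names, defaults, and the secret path are assumptions, not our exact code.

variable "create" {
  description = "Whether to create the GitHub webhooks"
  type        = bool
  default     = true
}

variable "webhook_list" {
  description = "Repository names (under the GitHub provider's owner) that send events to Atlantis"
  type        = list(string)
  default     = []
}

# Shared secret GitHub uses to sign each webhook payload
resource "random_password" "webhook_secret" {
  length  = 32
  special = false
}

# Store the same value so Atlantis can read it as ATLANTIS_GH_WEBHOOK_SECRET
resource "aws_secretsmanager_secret" "webhook_secret" {
  name = "/atlantis/webhook_secret" # hypothetical secret name
}

resource "aws_secretsmanager_secret_version" "webhook_secret" {
  secret_id     = aws_secretsmanager_secret.webhook_secret.id
  secret_string = random_password.webhook_secret.result
}
```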

Pre-Workflow Hooks

In Atlantis, you can define pre-workflow hooks that execute scripts before the default or custom workflows run. Pre-workflow hooks differ from custom workflows in several ways. One is that they do not require the repository configuration to be present, so they can be used to generate repo configs dynamically.

In our configuration, we created a server-atlantis.yaml file that we attach to Atlantis at deployment time. Its custom workflow logic is designed to:

- Identify the environment in which Atlantis is running

- Identify the branch name of the pull request
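We do not reproduce our full server-atlantis.yaml here. As a hedged sketch of the mechanism, a server-side repo config with a pre-workflow hook can be written in HCL and handed to the Fargate task through the ATLANTIS_REPO_CONFIG_JSON environment variable, the environment-variable form of Atlantis's --repo-config-json flag, which avoids baking a file into the image. The script path is hypothetical. The environment name is passed to the hook as an argument from var.env (the variable already used in DD_TAGS above), and Atlantis itself exposes the pull request's branch to hook scripts in the HEAD_BRANCH_NAME environment variable.

```hcl
# Sketch: server-side repo config with a pre-workflow hook, passed as JSON.
# ./scripts/generate-atlantis-config.sh is a hypothetical script in the repo.
locals {
  server_repo_config = jsonencode({
    repos = [
      {
        id = "/.*/" # apply to every allowlisted repository
        pre_workflow_hooks = [
          {
            # Runs in the repository checkout before any plan/apply workflow;
            # the script can read the branch from HEAD_BRANCH_NAME
            run = "./scripts/generate-atlantis-config.sh ${var.env}"
          },
        ]
      },
    ]
  })
}

module "atlantis" {
  source = "terraform-aws-modules/atlantis/aws"

  name = "atlantis"

  atlantis = {
    environment = [
      {
        name  = "ATLANTIS_REPO_CONFIG_JSON"
        value = local.server_repo_config
      },
    ]
  }
}
```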

We decided to run a Terraform plan automatically each time a pull request is opened or a new commit is pushed to it, by enabling autoplan:

```yaml
version: 3
...
...
projects:
  - name: terraform
    ...
    ...
    autoplan:
      enabled: true
```

Since we run a separate Atlantis instance per environment, we route pull requests as follows:

- Atlantis staging handles pull requests from feature/ branches into the develop branch

- Atlantis production handles pull requests from the develop branch into the master branch
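One way to enforce this split, sketched below under stated assumptions, is the branch key of Atlantis's server-side repo config: a regex matched against the pull request's base branch (the branch it merges into), so each instance ignores pull requests aimed at the other environment's branch. The sketch assumes var.env is either "staging" or "production"; the resulting JSON would be passed to each instance as ATLANTIS_REPO_CONFIG_JSON, or the branch key merged into an existing server-side repo config.

```hcl
# Sketch: each Atlantis instance only serves PRs targeting its base branch.
# Assumes var.env is "staging" or "production".
locals {
  base_branch_by_env = {
    staging    = "/^develop$/" # feature/* -> develop
    production = "/^master$/"  # develop -> master
  }

  branch_repo_config = jsonencode({
    repos = [
      {
        id     = "/.*/"
        branch = local.base_branch_by_env[var.env]
      },
    ]
  })
}
```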

Explore the complete custom workflow here.

Summing up

By running Atlantis on AWS ECS, we not only improved the visibility of our infrastructure as code (IaC) but also gained direct feedback on pull requests, which promotes better collaboration within the team. The key to this improvement is the automation that Atlantis brings to our infrastructure workflow.

You can find the full code for launching Atlantis on ECS with our custom workflow here.
